This whitepaper is a summary of the IAB Tech Lab’s event on September 22nd, 2022, the second session in a series on the topic of Privacy Enhancing Technologies (PETs). A recording of this event can be watched back here, and registration for the third event, 8 Dec, 2022 can be found here.

PETs are the technologies that are focused on maximizing data security to protect consumer privacy and minimizing the amount of data processed. The term PETs is a broad definition that covers a range of technologies that are united by the goal of protecting personal information.

The IAB Tech Lab has a multi-year initiative addressing PETs

- 2022- Awareness: Focus on driving awareness of industry PETs proposals

- 2023- Action: Work with browsers/tech lab members to get PETs proposal(s) to market

- 2024- Adoption: Drive adoption of these frameworks

The IAB Tech Lab’s PETs Working group will continue to bring together experts to define and evolve standards and Open Source software projects as well as provide guidance on the use and integration of PETs into Adtech stacks. Register here to join the PETs working group.

1. Why PETs are important

The data that is available to fuel advertising is changing due to three forces in the industry:

- Global regulations, such as Europe’s General Data Protection Regulation (GDPR), Brazil’s Lei Geral de Proteçao de Dados (LGPD) and California’s California Consumer Privacy Act (CCPA) are giving people options to limit how data is shared with businesses.

- Cookies and device-based IDs are crumbling thanks to new data policies from platforms such as Apple’s SKAdNetwork and Google’s cookie deprecation. 40% of web traffic is already cookie-less, meaning the post-cookie world is effectively already here.

- Evolving consumers are expecting privacy by design and are choosing to opt out of receiving ads and data sharing.

As data availability shifts, how advertisers can achieve the fundamental use cases such as targeting, measurement and optimization are impacted, and marketers will need access to a portfolio of privacy safe solutions to satisfy these use cases.

2. Overview of techniques used by PETs

Most of the PETs solutions in ad tech use one or more of the below techniques:

- Secure Multi-Party Computation (MPC) – A technique that allows two or more entities to share encrypted data through multiple nodes/servers and extract insight but not learn anything about each other’s data.

- Trusted Execution Environment (TEE)- Have some similarities to MPCs but differ by enabling operation within a single server. TEEs allow data to be processed within a secure piece of hardware that uses cryptographic protections creating a confidential computing environment that guarantees security and data privacy during data processing.

- On-device learning– Technology that employs an algorithm trained on historical data generated by actions such as consumer interest or conversion to then make predictions. The information is processed directly on the device, with no individual information sent back to the server.

- Differential Privacy (DP) – Differential Privacy is an approach to analyze a data set which through math, provides a formal privacy guarantee by controlling the amount of privacy loss. DP is a property of an algorithm so it can be applied uniformly to different data sets thus protecting individual identity from being reconstructed or reidentified. DP can be, and is often, combined with other PETs as part of an overall approach.

- Aggregation/K-anonymity – A technique where data is aggregated to a minimum privacy threshold, ensuring that at least a minimum number of data points with identifiers removed- known as ‘k’- are included in the result.

- Federated Learning (FL)- A machine learning technique that enables models to be trained on decentralized data across multiple parties, without exchanging any information.

These techniques form the foundation of solutions, but should not be the end goal in themselves, as Dennis Buchheim, VP, Advertising Ecosystem, Meta explained, “Many different technologies are enabling PETs or are PETs, but it is important to step back and to understand what they solve for, what capabilities they have. These are not solutions by themselves, but are instead foundational technologies and capabilities that enable solutions.”

3. How to evaluate PETs

With so many techniques available, it is important to spend some time considering which PETs, or combination of PETs, is the right fit for the use case.

Find the right PETs for the use case

When evaluating different PETs solutions, the first step is to consider the use case to select the PETs that are the most relevant for that specific use case. This creates clarity about what protections can be put in place and the minimum amount of data that can be used to satisfy that use case. The goal is to find a solution that can maximize privacy and utility for any given use case, finding a balance and being aware of how the balance will shift over time.

Consider the utility/privacy trade off

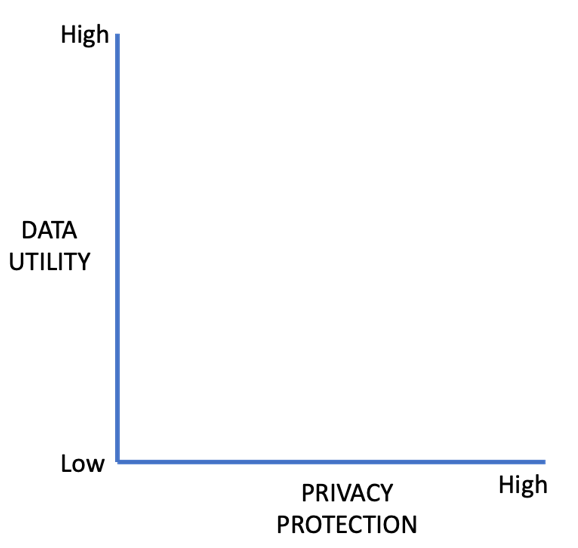

The below graph (presented by Meta) illustrates the balance between privacy and utility that needs to be taken into account when evaluating PETs solutions. In one extreme it is possible to have perfect privacy with no utility, versus the other extreme of no privacy and perfect utility. Optimal solutions should consider both privacy and utility and increasingly, technological innovations will help us move up and to the right.

Data utility versus privacy protection axes

Evaluate the business impacts

As part of the evaluation of PETs, the impact of engaging these technologies on the business must be considered. Factors such as the time it takes to implement the technology and the cost of infrastructure and human resourcing will play a large role in assessing PETs. Though the cost of computation is expected to go down over time, often the size of data that needs to be handled is enormous this can create a high infrastructure cost which needs to be considered at the evaluation stage.

4. Deep dives into some key techniques used by PETs

Here, two techniques, differential privacy and federated learning, are explored in more detail to illustrate how technology and math can create PETs.

Differential Privacy (DP)

Differential Privacy, through math, provides a formal privacy guarantee by controlling the amount of privacy loss. The mathematical definition of DP is that a random function k will provide guaranteed ε-differential privacy over two adjacent data sets, even when the two adjacent sets are different in just one record. In other words, differential privacy provides a mechanism (i.e., the function of k) of adding statistically calibrated noise which stabilizes the data to protect rows of data from re-identification within the privacy budget controlled by ε (epsilon).

In terms of where DP can be implemented, it is important to remember DP is the property of the algorithm and can be done in different places (such as on device or on server), depending on use cases and requirements.

Types of DP

- Pure DP: where the δ (delta) is smaller or near 0. Adjusting the ε (epsilon) from 0 to 1 gives a range of privacy preserving outcomes where 0 is 100% private and 1 is not private at all. More frequently uses the Laplace distribution, which creates stronger privacy but loses the understanding of what the data is.

- Approximate DP: the δ (delta) is larger, meaning there is more difference between the two data sets, so it needs more work to preserve the accuracy of the outcomes and balancing the inferences that come out of it. Considered worse for privacy but higher in accuracy. More frequently uses the Gaussian distribution, which has smaller variance and less privacy.

It is important, therefore, to pay close attention to the data and apply data sensitivity. For example, in Multi-touch Attribution, some attributes are considered sensitive, and some are not. In this case, sensitive attributes will go through the Laplacian distribution for noise, and those that are not sensitive will go through the Gaussian distribution.

Finally, be aware that DP is not the panacea to keep data anonymous, DP is one of the ways available to protect privacy of users but also should be done with other data governance and security measures e.g., encryption.

Federated Learning (FL)

Federated Learning (FL) is a machine learning product. To understand FL, first it is important to define standard supervised machine learning. In standard machine learning, there is a centralized fixed dataset (such as on a cloud server) which is made of attributes associated with individuals in labels. Based on this dataset, offline training of the machine learning algorithms is performed to create online predictions about a new user.

However, in the real world, data is not held in a centralized data set, rather across many different parties such as advertisers, browsers or publishers so standard machine learning cannot be applied. It is not an option to learn locally on 1st party data only, as firstly, there is not enough data to make good predictions on unseen users, and secondly local data can be biased such as trying to predict, for example, French users on a dataset of American users. Likewise, centralizing data is not possible as data collection is too costly, the data too sensitive and data breaches might occur.

Centralized Federated Learning is one solution to these bottlenecks. In this case, a centralized trusted server performs collaborative learning with all the parties which have access to local data. This allows for standard machine learning training to be performed without having to centralize all data, but instead by each party sharing some machine learning algorithms/models, or gradients etc back to the centralized server.

There are some challenges to FL. As an example, just like other PETs, there is a privacy/utility trade off as FL only ensures first order privacy guarantee by not sharing data. This can be substantial because the model parameters that are exchanged with servers and other parties are based on local data and might implicitly leak some information about the data. To alleviate this, other PETs such as DP, MPC, TEEs etc can be applied to protect user privacy.

Overall though, FL may help with privacy regulation compliance as raw data is not being sent to a single place, which helps minimize the risk of data breaches. FL should be considered a building block of a privacy strategy that can be enhanced by using other PETs concurrently.

5. Example PETs solutions and proposals in market today

A handful of core players in the industry have employed some of the above techniques to put some specific PETs proposals forward. These include Google’s Privacy Sandbox, Mozilla and Meta’s Interoperable Private Attribution (IPA) and Apple’s Private Click Measurement (PCM). All of which are at the feedback stage through forums such as the W3C Private Advertising Technology Community Group, IAB Tech Lab PETs working group, and others. In addition to these proposals, there are already other PET solutions that are in-market and ready to use today.

This section drills down in more detail to cover one example of a PETs proposal, Google’s Privacy Sandbox, and three examples of PETs solutions that are in market today, Meta’s Private Lift and Private Attribution and Magnite’s SSP.

Example PETs proposal: Google Privacy Sandbox

Privacy Sandbox Roadmap

The Privacy Sandbox is currently in technical testing mode, with the ads API currently exposed to 1% of chrome stable traffic. The current focus is on working with industry testers to test isolated features and improve developer experience.

The next phase will be performance testing mode where chrome traffic will be gradually expanded and technology that is designed to support advertising use cases will be made generally available to do testing at an industry level.

How privacy approaches are evaluated and applied in the Privacy Sandbox

Google’s goal for the Privacy Sandbox is to limit cross site tracking, but to allow a controlled amount of cross site information to be shared to enable critical use cases to power the open web.

With this in mind, it can be helpful to consider each privacy approach in two parts. Firstly, understand the boundaries that are being established i.e., where is cross site data being shared? Secondly clarify the privacy definition / guarantees that are being enforced at those boundaries, i.e., how much or quickly data is passing across those boundaries? By breaking out these two different aspects, a broader range of solutions become available.

An example of how this is applied in the Privacy Sandbox is in the case of FLEDGE (a proposal for on-device ad auctions to serve remarketing and custom audiences, without the need for cross-site third-party tracking). FLEDGE provides an interesting scenario of how to enforce a boundary without having to do everything on the device. In the FLEDGE example, when deciding whether to serve an ad from a campaign it is important to know if the campaign still has budget. In theory campaign budgets could be pushed to every device in the world, but this is not practical, as there would be large volumes of data that is not used creating huge inefficiencies. To avoid this, the FLEDGE proposal does not do everything on the device, and instead extends the boundary out further to the key value server. This means real time information can be pulled into the auction, such as campaign budgets, but it does not allow anything out. This creates efficiencies in the data sent to devices by not pushing everything to device when it does not make sense from a computational point of view.

Call for feedback to the Privacy Sandbox

Google is currently seeking feedback on the Privacy Sandbox APIs, with a key focus making sure the tools are usable. There are also long-term projects to evaluate the taxonomy and continue to develop this based on feedback. As Christina Ilvento, Privacy Sandbox, Google said,” Now is an excellent time to be providing feedback that influences the shape of the APIs, such as feedback on the interfaces themselves, tools, developer ergonomics, this is all helpful feedback to us right now….and will help to shape how the API structure evolves over time.”

Example PETs solutions in market: MPC at Meta and Magnite

This section explores how MPC technology is powering privacy safe tools that are already in the market, taking examples from Meta and Magnite.

Magnite

As an SSP, Magnite leverages MPC to support activation in a way that does not require Magnite to see the raw data to achieve outcomes. Advertisers and publishers encrypt data such as 1st party publisher lists or advertiser customer lists using MPC. This encrypted data is then delivered to Magnite, who can match and create synthetic stable IDs out of that data which can then be put into deals to achieve activation out of that data.

Meta

Meta started with a small team to build Private Computation which has grown into a large ads initiative, focused on building with privacy as a first principle, creating a new stack which is fully open source from the start.

This group decided to focus first on measurement as it is foundational – optimization requires measurement to function. From this, two products were created that are in beta today, Private Lift and Private Attribution.

Taking Private Lift as an example, Meta leverages various privacy techniques through the following three step process:

- Private records linkage: private records are generated from records held by the advertiser and by Meta.

- Private attribution records: private records have attribution rules applied to them using MPC, to create private attributed records.

- Private aggregation: attributed records are aggregated and anonymized – this can leverage DP or K-anonymity.

6. How are PETs being received by the industry?

Broadly, advertisers are interested in the opportunities PETs present, and understand that in the long-term PETs will play an important role in the advertising industry, as one private computation advertiser said, “I see this as the future of all our ad platforms”.

However, marketers are often focused on internal business goals and may not yet feel a sense of urgency in adopting PETs. Therefore, there is a feeling that the rest of the industry must approach this very collaboratively, investing where needed and educating to keep up the momentum in creating solutions that lower the barrier to entry and can be easily used.

7. The role of data clean rooms

What are data clean rooms?

Data clean rooms are decentralized environments that enable two or more parties to process their sets of data, often jointly, in a secure and purpose-limited way. Though slightly different technologies, there are a lot of adjacencies between data clean rooms and PETs and they are often used together as part of an overall privacy solution.

There are several different types of data clean rooms, and different types will be relevant for different use cases. To summarize the main types found:

- Single party centralized clean room: where data from just one party goes in and can be connected with other data sets.

- Multi party centralized clean room: where data is taken from multiple partners both inside and outside of an organization and centralized.

- Decentralized/agnostic clean room: data is connected in an independent, trusted environment. No one party has advantage over another, and the data is not linked reducing the risk of data leakage.

How are advertisers using data clean rooms?

Advertisers can use data clean rooms in three ways:

- Generate insights between multiple data sets

- Planning and activation

- Measurement

For example, data clean rooms might connect data sets to help advertisers understand lift without sharing data, or in activation to help a buyer and a seller to work together to build a custom audience for that brand.

Challenges using data clean rooms

Challenges in interoperability. There are a multitude of data clean rooms available which each have their own data sharing mechanisms that their clients must work with. This means, that without standards, the organizations using data clean rooms must adapt for each technology they engage with, and sometimes these clean rooms are not compatible with each other. This is challenging in terms of time and resource, as Nick Illobre, SVP of Product Strategy, NBCUniversal said “As a publisher, if a client wants to partner with a new clean room, we have to stand everything up again. This sounds simple, but it can be nuanced, for example if a new partnership means a piece of data is newly exposed issues, can snowball beyond being a technical problem, as internal decisions now must be made about exposing that data point.”

Strategic and commercial mismatches. Being aware of commercial and strategic considerations is as important as the technical aspects. Though sometimes not technically difficult to push an audience from one platform to another, there are still challenges in terms of understanding the minimum level of privacy both parties are comfortable with. Likewise, there are commercial contexts for each provider, for example one clean room may own media and therefore only want to take data in but not let data out.

Data clean rooms are not a silver bullet. Ultimately, the data clean room is a piece of technology that cannot solve all privacy needs but rather works as part of a system of technologies and business needs. As Edik Mitelman, GM, Privacy Cloud, AppsFlyer said “Data clean rooms without the additional layer of PETs are essentially a database that can share two tables”, so they must be considered part of the stack working together with PETs to create an overall privacy safe approach.

Roadmap on data clean room standards

Through industry collaboration, innovation, and education it is hoped some of these challenges can be overcome. Standards are an enabler to make it easier for advertisers to use data clean rooms, and the first step to creating more efficient interoperability.

Data clean rooms are addressed in the IAB Tech Lab’s Rearc Addressability Working Group. A key 2022 deliverable of this group is the Data Clean Room Standards, a draft of which will be presented in December 2022. For more information on this group, please click here.

Key Takeaways

- Attribution and measurement are perceived as the low hanging fruits where PETs and clean rooms can make the most immediate impact, as Devon DeBlasio, VP, Product Marketing, InfoSum explained, “[Data clean rooms] can solve the most basic measurement questions such as ‘how do you measure lift?” without sharing sensitive data. Today it can be a risk to connect exposure data from a publisher with transaction data from a brand, so data clean rooms solve for those most basic measurement cases. At the moment we are focussed on chipping off ‘low hanging fruit’ use cases such as for planning, targeting, measurement and activation.”

- Avoiding cross site profiling of individuals is a central privacy principle which is being adopted by many leaders in the industry. Erik Taubeneck, Research Scientist, Cryptography and Internet Technology, Meta explained how Meta “aims to provide as much flexibility as possible whilst still preventing leakage of cross site information about individuals”, as a central design principle.

- There is consensus from the W3C that differential privacy is an appropriate way to measure the information coming out of a privacy system. This positions DP as a central technology and provides a way to guarantee privacy, therefore DP is very often found as part of overall privacy solutions.

- Federated learning offers a private way to apply machine learning in a decentralized data set, asMaxime Vono, Senior Research Scientist, Criteoexplained, “[FL] allows machine learning training without requiring centralization of parties’ data where the data collection is too costly, the data is too sensitive and data breaches might occur.”

- The utility of privacy solutions is a core focus, and something the industry needs to work together on and to define better to help those developing PETs. Participating in the feedback loops facilitated by the IAB Tech Lab and W3C, or directly with the PETs developers themselves, will help ensure the solutions are usable in the short and long term.

- It is encouraging that advertisers want PETs to work for them, rather than avoiding PETs solutions entirely, as Ethan Lewis, Chief Technology Officer, Kochava, explained “Advertisers are excited and are moving forward. Though it is slow adoption and there is push back when campaign performance gets hit, the feedback from advertisers is ‘how can we maximize the utilization for these solutions?’ rather than wanting to avoid PETs.”

- As Google detailed in their approach in section 5, better defining boundaries is a useful concept for thinking about on device computing. Establishing boundaries and considering the privacy guarantees that are being enforced at that boundary helps to think about applying on device, what stays on device, and what can leave the device and in what shape and form.

- Experts recommend solving smaller problems first before addressing bigger issues as Andrei Lapets, VP, Engineering & Applied Cryptography, Magnite, said “There is a range of things MPC can be useful for- it doesn’t have to be far-reaching and consequential; it could also be used to address small [issues]. In my opinion, we can’t learn how to solve big problems without solving little problems first. This is the philosophy Magnite follows when looking for use cases for MPCs.”

- Investment is crucial for the future of the industry, despite some advertisers’ lack of urgency. James Reyes, Tech Lead, Private Computation, Meta explained that “Advertisers do not yet feel a sense of urgency. Advertisers’ primary objective is to drive sales and measure performance, so they are comparing MPC systems to non MPC systems and those other systems don’t have additional constraints. New regulations, competitors doing it, or more features/ accuracy are the cited reasons that an advertiser would increase their sense of urgency. But, I don’t think this should deter us, the interest and the momentum are there, so it just means it’s going to be a longer journey.” Andrei Lapets, VP, Engineering & Applied Cryptography, Magnite agreed with this and urges those that have the resources to invest, “Even though there is not a sense of urgency today, we’re not going to have the kinds of answers with the kinds of data that we need to make these large-stakes decisions later unless some of us invest today. So, if you are in a position to make that investment I would encourage you to try, and it will make the answers for yourself and everyone else better in the long term.”

ABOUT THE AUTHOR

Written by Alexandra Kozloff on behalf of Rachit Sharma

Rachit Sharma

Director, Product Management, Privacy Enhancing Technologies (PETs) Programs

IAB Tech Lab